How to call an asset

This guide shows how to call your deployed models, pipelines, and agents using the aiXplain SDK.

- Models

- Pipelines

- Agents

Models

The aiXplain SDK allows you to run models synchronously (Python) or asynchronously (Python and Swift). You can also process Data assets (Python).

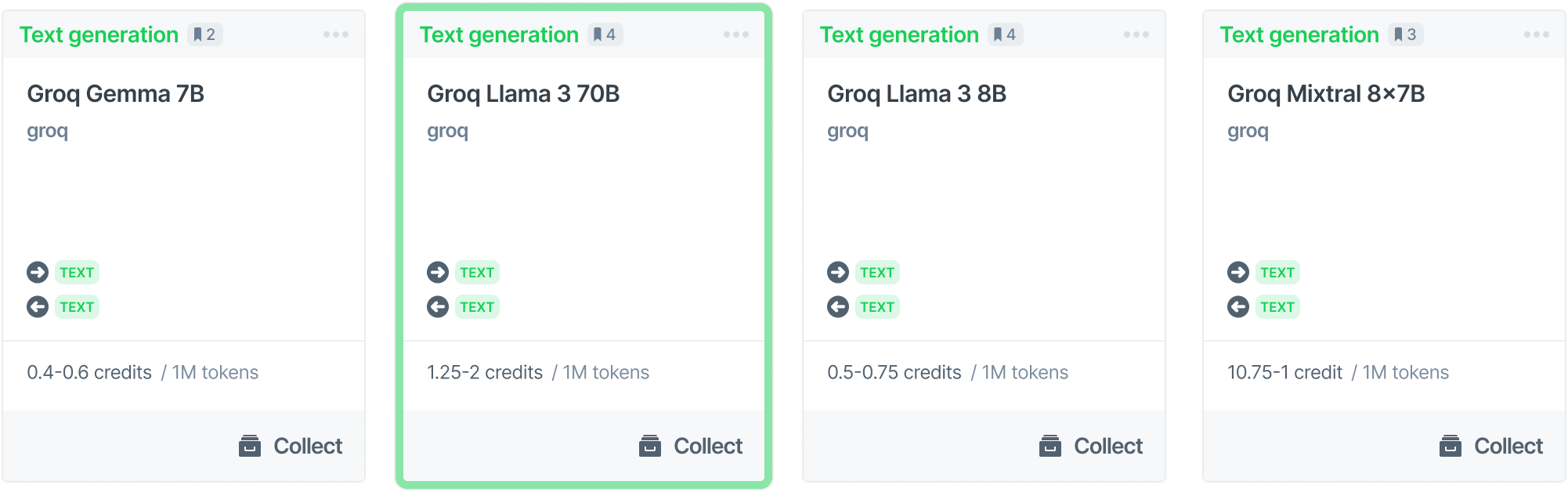

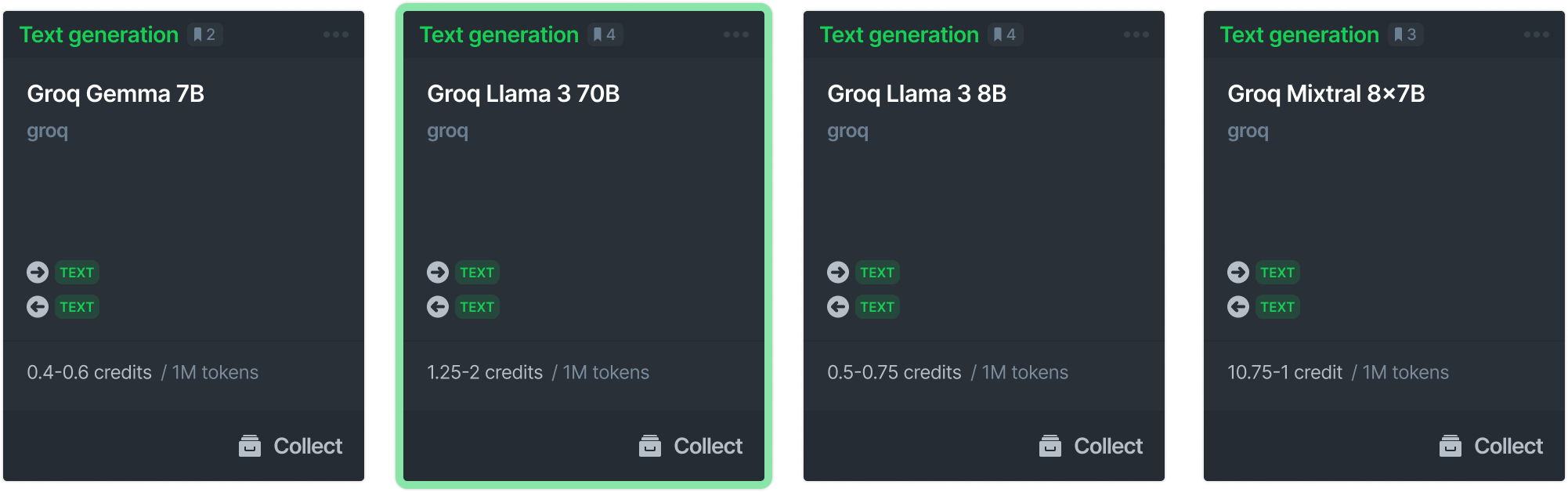

Let's use Groq's Llama 70B as an example.

- Python

from aixplain.factories import ModelFactory
from aixplain.enums import Supplier

model_list = ModelFactory.list(suppliers=Supplier.GROQ)["results"]
for model in model_list:
    print(model.__dict__)

model = ModelFactory.get("6626a3a8c8f1d089790cf5a2")

- Swift

let provider = ModelProvider()
Task {
    let query = ModelQuery(query: "groq")
    let result = try? await provider.list(query)
    result?.forEach {
        print($0.id, $0.name)
    }
}

let model = ModelProvider().get("6626a3a8c8f1d089790cf5a2")

Model (and pipeline) inputs can be URLs, file paths, or direct text/labels (if applicable).

The examples below use only direct text.
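To make the three input kinds concrete, the sketch below sorts a string into URL, file path, or direct text. It is purely illustrative and does not describe how the SDK itself decides. The function name `classify_input` and the choice to accept only `http`/`https` links are assumptions made for this example.

```python
import os
from urllib.parse import urlparse


def classify_input(value: str) -> str:
    """Return "url", "file", or "text" for a candidate model input.

    Hypothetical helper for illustration only; not part of the aiXplain SDK.
    """
    parsed = urlparse(value)
    # Assumption: only http(s) links that include a host count as URLs here.
    if parsed.scheme in ("http", "https") and parsed.netloc:
        return "url"
    # A string that names an existing file on disk is treated as a file path.
    if os.path.isfile(value):
        return "file"
    # Everything else is treated as direct text.
    return "text"


if __name__ == "__main__":
    print(classify_input("https://example.com/audio.wav"))
    print(classify_input("Tell me a joke about dogs."))
```

A check like this can be handy for logging what kind of input you are about to send before calling `model.run`.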

Synchronous

- Python

model.run("Tell me a joke about dogs.")

Use a dictionary to specify additional parameters or if the model takes multiple inputs.

model.run(
    {
        "text": "Tell me a joke about dogs.",
        "max_tokens": 10,
        "temperature": 0.5,
    }
)

Asynchronous

- Python

import time

start_response = model.run_async("Tell me a joke about dogs.")
start_response

Use the poll method to monitor inference progress.

while True:
    status = model.poll(start_response['url'])
    print(status)
    if status['status'] != 'IN_PROGRESS':
        break
    time.sleep(1)  # wait for 1 second before checking again

- Swift

let result = try await model.run("Tell me a joke about dogs.")
print(result)

Use a dictionary to specify additional parameters or if the model takes multiple inputs.

let result = try await model.run([
    "text": "Tell me a joke about dogs.",
    "max_tokens": 10,
    "temperature": 0.5
])
print(result)
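The Python polling loop above runs until the status changes, however long that takes. A minimal sketch of a bounded version follows, assuming only what the loop already relies on: a poll callable that takes the URL and returns a dictionary with a `status` key. The helper name `wait_for_result`, its timeout, and the backoff values are assumptions for illustration, not part of the SDK.

```python
import time
from typing import Any, Callable, Dict


def wait_for_result(
    poll: Callable[[str], Dict[str, Any]],
    url: str,
    timeout: float = 300.0,
    interval: float = 1.0,
    max_interval: float = 10.0,
) -> Dict[str, Any]:
    """Poll until the status leaves IN_PROGRESS or the timeout expires.

    Hypothetical helper; `poll` can be any callable shaped like model.poll.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = poll(url)
        # Any status other than IN_PROGRESS is treated as final, as in the loop above.
        if status.get("status") != "IN_PROGRESS":
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Still IN_PROGRESS after {timeout} seconds")
        time.sleep(interval)
        # Back off gradually so long jobs are not polled every second.
        interval = min(interval * 2, max_interval)
```

With the objects from the example above, this could be called as `wait_for_result(model.poll, start_response["url"])`.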

Process Data Assets

You can also perform inference on Data assets (Corpora or Datasets). You will need to onboard a data asset to use it.

Inference on Data assets is only available in Python.

Each data asset has an ID, and each column in that data asset has an ID, too. Specify both to perform inference:

Pipelines

The aiXplain SDK allows you to run pipelines synchronously (Python) or asynchronously (Python and Swift). You can also choose between two running modes (Python) and process Data assets (Python).

Pipeline (and model) inputs can be URLs, file paths, or direct text/labels (if applicable).

The examples below use only direct text.

Synchronous

- Python

result = pipeline.run("This is a sample text")

For multi-input pipelines, pass a dictionary whose keys are the label names of the input nodes (as shown in the pipeline design) and whose values are the corresponding content:

result = pipeline.run({
    "Input 1": "This is a sample text to input node 1.",
    "Input 2": "This is a sample text to input node 2."
})

# or

result = pipeline.run(data={
    "Input 1": "This is a sample text to input node 1.",
    "Input 2": "This is a sample text to input node 2."
})
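Because the dictionary keys must match the input node labels exactly, a typo in a label is easy to miss. The sketch below checks the keys before the call. It assumes you supply the expected labels yourself (for example, copied from the pipeline design); `check_pipeline_inputs` is a hypothetical helper and does not query the SDK.

```python
from typing import Dict, Iterable


def check_pipeline_inputs(data: Dict[str, str], expected_labels: Iterable[str]) -> None:
    """Raise ValueError if the dictionary keys differ from the input node labels.

    Hypothetical helper; the expected labels are provided by the caller.
    """
    expected = set(expected_labels)
    provided = set(data)
    missing = sorted(expected - provided)
    unexpected = sorted(provided - expected)
    if missing or unexpected:
        raise ValueError(
            "Input labels do not match the pipeline design: "
            f"missing={missing}, unexpected={unexpected}"
        )


if __name__ == "__main__":
    inputs = {
        "Input 1": "This is a sample text to input node 1.",
        "Input 2": "This is a sample text to input node 2.",
    }
    check_pipeline_inputs(inputs, ["Input 1", "Input 2"])  # passes silently
```

Running the check first gives a local error message that names the offending label before any request is sent.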

Asynchronous

- Python

import time

start_response = pipeline.run_async({
    "Input 1": "This is a sample text to input node 1.",
    "Input 2": "This is a sample text to input node 2."
})

Use the poll method to monitor inference progress.

while True:
    status = pipeline.poll(start_response['url'])
    print(status)
    if status['status'] != 'IN_PROGRESS':
        break
    time.sleep(1)  # wait for 1 second before checking again

- Swift

let result = try await pipeline.run([
    "Input 1": "This is a sample text to input node 1.",
    "Input 2": "This is a sample text to input node 2."
])

Running Modes

You can run a pipeline in one of two modes:

- Service mode: For short pipelines (seconds to a few minutes) where pods are always on. The pipeline executes immediately, with no warmup time.

- Batch mode: For longer-running pipelines (minutes to hours) where the pipeline gets dedicated resources. This adds a warmup time before the pipeline executes.

Control the mode by passing the batchmode argument as follows.

- Python

result = pipeline.run(
    data={
        "Input 1": "This is a sample text to input node 1.",
        "Input 2": "This is a sample text to input node 2."
    },
    **{"batchmode": False}
)
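If you have a rough idea of how long a pipeline takes, the choice between the two modes can be written down as a small rule. The sketch below builds the keyword arguments shown above from an expected duration. The helper name `mode_kwargs` and the five-minute threshold are assumptions for illustration; pick a cutoff that suits your own pipelines.

```python
from typing import Dict


def mode_kwargs(expected_seconds: float, batch_threshold_seconds: float = 300.0) -> Dict[str, bool]:
    """Build the keyword arguments that select the pipeline running mode.

    Hypothetical helper: jobs expected to run at least as long as the
    threshold use batch mode, and shorter jobs use service mode.
    """
    return {"batchmode": expected_seconds >= batch_threshold_seconds}


if __name__ == "__main__":
    print(mode_kwargs(30))    # short job, service mode
    print(mode_kwargs(3600))  # long job, batch mode
```

The result can be unpacked into the call in the same way as the example above, for instance `pipeline.run(data=inputs, **mode_kwargs(30))`.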

Process Data Assets

You can also perform inference on aiXplain Data assets (Corpora or Datasets). You will need to onboard a data asset to use it.

Inference on Data assets is only available in Python.

Each data asset has an ID, and each column in that data asset has an ID, too. Specify both to perform inference:

Agents

The aiXplain SDK allows you to run agents and gives you the option to use short-term memory (history).

Invoking Agents

Use the run method to call an agent.

- Python

- Swift

agent_response = agent.run(
    "Analyse the following text: The quick brown fox jumped over the lazy dog. Answer in text and audio."
)
display(agent_response)

Use Python's regular expression module, re, to extract the URL by searching the output for the "https://" pattern.

import re

def extract_url(text):
    url_pattern = r'https://[^\s]+'
    match = re.search(url_pattern, text)
    if match:
        return match.group(0)
    return None

You can make an HTTP request to retrieve your output, then use IPython's Audio and display to play it.

from IPython.display import Audio, display
import requests

def download_and_display_audio(url):
    response = requests.get(url, stream=True)
    if response.status_code == 200:
        file_name = "downloaded_audio.mp3"
        with open(file_name, 'wb') as f:
            f.write(response.content)
        display(Audio(file_name))
    else:
        print(f"Failed to download file: {response.status_code}")

output_text = agent_response['data']['output']
print("output_text:", output_text)

url = extract_url(output_text)
print("url:", url)

download_and_display_audio(url)
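The pattern `https://[^\s]+` matches up to the next whitespace, so a URL that ends a sentence can pick up a trailing period or bracket, and only the first URL is returned. A minimal sketch of a more forgiving variant follows. The name `extract_urls` and the set of punctuation to trim are assumptions for this example; a URL that legitimately ends in one of those characters would be trimmed too.

```python
import re
from typing import List

# Stop at whitespace, angle brackets, and quotes, which often wrap URLs in text.
URL_PATTERN = re.compile(r"https://[^\s<>\"']+")
# Assumption: these characters at the end of a match belong to the sentence.
TRAILING_PUNCTUATION = ".,;:!?)]}"


def extract_urls(text: str) -> List[str]:
    """Return every distinct https:// URL in text, without trailing punctuation."""
    urls: List[str] = []
    for match in URL_PATTERN.findall(text):
        cleaned = match.rstrip(TRAILING_PUNCTUATION)
        if cleaned not in urls:
            urls.append(cleaned)
    return urls


if __name__ == "__main__":
    sample = "Audio: (https://example.com/a.mp3). Transcript: https://example.com/b.txt, enjoy."
    print(extract_urls(sample))
```

An empty list means the output contained no link, which is worth checking before attempting a download.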

In Swift, get and run your agent using the code below.

let agent = try await AgentProvider().get("<AGENT ID>")
let response = try await agent.run(input: "Analyse the following text: The quick brown fox jumped over the lazy dog. Answer in text and audio.")
print(response)

let output_text = response.data.output
print("output_text:", output_text)

Invoking Agents with Short-Term Memory

Every agent response includes a session_id value, which identifies a history.

To continue using a history, pass the session_id from the first response as an input parameter to all subsequent queries.

- Python

session_id = agent_response["data"]["session_id"]
print(f"Session id: {session_id}")

agent_response = agent.run(
    "What makes this text interesting?",
    session_id=session_id,
)
display(agent_response)

- Swift

// Uses the response from the earlier Swift example.
let sessionID = response.data.sessionID

let followUp = try await agent.run(
    input: "What makes this text interesting?",
    sessionID: sessionID
)
print(followUp)
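In Python, passing the session_id by hand on every call is easy to forget. The sketch below wraps an agent so the identifier is remembered between calls. It assumes only the call shape and response layout shown above (`agent.run(query, session_id=...)` and `response["data"]["session_id"]`); the class name `AgentSession` is hypothetical and not part of the SDK.

```python
from typing import Any, Dict, Optional


class AgentSession:
    """Remember the session_id between calls so follow-up queries share a history.

    Hypothetical convenience wrapper; not part of the aiXplain SDK.
    """

    def __init__(self, agent: Any) -> None:
        self.agent = agent
        self.session_id: Optional[str] = None

    def ask(self, query: str) -> Dict[str, Any]:
        if self.session_id is None:
            # First query: let the agent create a new history.
            response = self.agent.run(query)
        else:
            # Follow-up query: reuse the history from the previous response.
            response = self.agent.run(query, session_id=self.session_id)
        self.session_id = response["data"]["session_id"]
        return response
```

With an agent object in hand, usage could look like `session = AgentSession(agent)` followed by repeated calls to `session.ask(...)`.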

With these steps, you can call models and pipelines, and integrate them into your agents, to perform AI tasks.